Iterators, Iterable & Generators

Why do we have iterators & generators in Python

Building on the previous post about abstraction and encapsulation, this time I am going to write about iterators and generators, which is where we see abstraction and encapsulation in action within Python itself.

To start talking about Iterators, we need to know what an Iterable is because that is what an iterator works upon.

What is an Iterable?

Literally, iter-able means anything you can iterate or loop over, taking out one item at a time. In Python, an iterable is any object that is capable of returning its elements one at a time. Sequential and non-sequential collections alike are iterable: lists, tuples, strings, dictionaries and sets are the common examples.
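One way to check whether something is iterable is to ask the built-in iter() for an iterator and see whether it succeeds. The snippet below is only a small sketch of that idea; the helper name is_iterable and the sample values are my own, made up for illustration.

```python
from collections.abc import Iterable


def is_iterable(obj):
    """Return True if iter(obj) succeeds (hypothetical helper for illustration)."""
    try:
        iter(obj)
    except TypeError:
        return False
    return True


# A list, a tuple, a string, a dictionary, a set, and a plain integer.
samples = [[1, 2], (1, 2), "ab", {"a": 1}, {1, 2}, 42]

results = [is_iterable(sample) for sample in samples]
for sample, ok in zip(samples, results):
    print(type(sample).__name__, ok)

# The abstract base class gives the same answer for these built-in types.
abc_results = [isinstance(sample, Iterable) for sample in samples]
```

Everything except the integer passes the check, which matches the list of collections above.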

What is an Iterator?

As the name suggests, iter-a-tor is something that can iterate or loop over an iterable.

An iterator is an object that sits in front of an iterable and fetches its elements one at a time, whenever they are needed. As we can see in the snippet below, we pass the collection (a list) to the built-in iter() function, which returns an iterator for it. Whenever we call the built-in next() function on the iterator, it goes to the iterable and gets one more element. When the elements are exhausted, a StopIteration exception is raised to signal that the iteration is over.

    months = ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'June', 'July', 'Aug', 'Sept', 'Oct', 'Nov', 'Dec']

    iterator_for_months = iter(months)

    while True:
        try:
            month = next(iterator_for_months)
            print(month)
        except StopIteration:
            break

Why do we need an Iterator when we have loops?

Every programming language provides loops. The idea is to go over a collection, pick up an element and process it, until you reach the end of the collection. That is exactly what we are trying to achieve with an iterator, so why do the same work twice?

The bubble buster is that an iterator is actually sitting beneath every for loop. A for loop does not go to the iterable directly; it only talks to the iterator until the iterator raises StopIteration, and under the hood it is still the iterator that accesses the iterable. Hence, a for loop is nothing but an infinite while loop whose complexity is hidden behind a much simpler syntax.
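To make this concrete, here is a rough sketch of what a for loop does on our behalf. The function manual_for is a hypothetical helper I made up for illustration; it is not how the interpreter is literally implemented, only an equivalent written in plain Python.

```python
def manual_for(iterable, action):
    """Roughly what `for item in iterable: action(item)` does (illustrative helper)."""
    iterator = iter(iterable)        # ask the iterable for an iterator, once
    while True:
        try:
            item = next(iterator)    # fetch the next element through the iterator
        except StopIteration:
            break                    # the iterator is exhausted, so the loop ends
        action(item)


# The ordinary for loop...
seen_by_for = []
for letter in "abc":
    seen_by_for.append(letter)

# ...and the hand-written while loop visit the same elements in the same order.
seen_by_while = []
manual_for("abc", seen_by_while.append)

print(seen_by_for == seen_by_while)
```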

You see what happened here, ABSTRACTION!

Firstly, an iterator can be created regardless of the type of the iterable or the data types inside it, hence the reusability. We can have a list, a set, a dictionary, or any combination of data types inside them; the iterator is not bothered. All it does is pick up an element and return it, continuing for as long as elements remain. Once exhausted, the iterator retires.

Moreover, when we use a for loop we don’t even care about the infinite loop and the exception handling running underneath; we are only concerned with the compact, Pythonic syntax of the for loop.
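As a small illustration of that reusability, the sketch below runs the same piece of code over four different kinds of iterable. The helper first_n and the sample data are assumptions of mine, not something Python ships with.

```python
def first_n(iterable, n):
    """Collect up to n elements from any iterable (hypothetical helper)."""
    iterator = iter(iterable)
    collected = []
    for _ in range(n):
        try:
            collected.append(next(iterator))
        except StopIteration:
            break                      # fewer than n elements were available
    return collected


from_list = first_n([10, 20, 30], 2)
from_tuple = first_n((1, "two", 3.0), 5)    # mixed data types are fine
from_str = first_n("python", 3)
from_dict = first_n({"a": 1, "b": 2}, 1)    # iterating a dict yields its keys

# Once exhausted, the iterator has nothing more to give.
exhausted = iter([1])
next(exhausted)
retired = next(exhausted, "done")           # the default is returned instead of raising
```

The helper never needs to know which type it was handed; it only talks to the iterator.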

Iterators and Lazy Evaluation

An iterator is an object that serves elements one at a time. It does not have to iterate over an existing iterable; it can also generate elements on its own. The best part is that the iterator does not generate the whole collection at once and consume memory for it; an element is generated only when it is requested from the iterator. This is what we call lazy evaluation: work only when asked to.

Given below is a code snippet showing lazy evaluation at work. The class evenNumbers implements an iterator. We pass a max value as an argument and keep calling next(). On each call, the iterator returns the next even number smaller than max. Once no such number is left, the iterator raises StopIteration and stops.

    def check_even(number):
        # True when the number is divisible by 2
        return number % 2 == 0

    class evenNumbers:
        def __init__(self, max):
            self.max = max      # exclusive upper bound
            self.number = 1     # last number examined so far

        def __iter__(self):
            # an iterator returns itself from __iter__
            return self

        def __next__(self):
            self.number += 1
            if self.number >= self.max:
                raise StopIteration
            elif check_even(self.number):
                return self.number
            else:
                # odd number: move on to the next candidate
                return self.__next__()

The list of even numbers was never generated and stored in memory; each number was generated only when next() was invoked. This might not sound like much of a performance boost for small datasets, but think of bigger datasets, where both the time spent generating the data and the memory spent storing it can be reduced significantly by using iterators.
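To see the "only when asked" behaviour directly, here is a minimal sketch with an iterator that has no upper bound at all, so it could never be stored as a list. The class Squares and its computed counter are hypothetical names I introduced just to count how much work was actually done.

```python
from itertools import islice


class Squares:
    """Hypothetical iterator yielding 1, 4, 9, ... with no upper bound."""

    def __init__(self):
        self.n = 0
        self.computed = 0        # how many values have actually been produced

    def __iter__(self):
        return self

    def __next__(self):
        self.n += 1
        self.computed += 1
        return self.n * self.n


squares = Squares()
before = squares.computed                 # nothing has been computed yet
first_three = list(islice(squares, 3))    # ask for exactly three values
after = squares.computed                  # exactly three values were computed

print(before, first_three, after)
```

Creating the iterator costs nothing up front; the counter only moves when a value is requested.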

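Just to have a rough idea: in the session shown below, a list of the first five even numbers takes 40 bytes while an iterator takes only 16 bytes, a 2.5x difference. The exact figures depend on the Python version and platform. Note also that an iterator created from a list still refers to that list; the real saving comes when the iterator generates its values itself, because a list needs room for every element while an iterator only stores its current state.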
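If you want to try the comparison on your own interpreter, the sketch below uses sys.getsizeof on a self-generating iterator and on the list built from it. The class EvensBelow is a hypothetical stand-in I wrote for this check, and the byte counts you see will vary between Python versions, so I have deliberately not listed any. Keep in mind that getsizeof reports only the size of the object itself, not of everything it refers to.

```python
import sys


class EvensBelow:
    """Hypothetical iterator yielding even numbers smaller than limit."""

    def __init__(self, limit):
        self.limit = limit
        self.current = 0

    def __iter__(self):
        return self

    def __next__(self):
        self.current += 2
        if self.current >= self.limit:
            raise StopIteration
        return self.current


small_iter = EvensBelow(100)
large_iter = EvensBelow(100_000)

# Materialising the numbers needs a slot for every element...
small_list = list(EvensBelow(100))
large_list = list(EvensBelow(100_000))

# ...while the iterator object stays the same size whatever the limit is.
print("lists:    ", sys.getsizeof(small_list), sys.getsizeof(large_list))
print("iterators:", sys.getsizeof(small_iter), sys.getsizeof(large_iter))
```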

    >>> iter([2,4,6,8,10]).__sizeof__()
    16
    >>> [2,4,6,8,10].__sizeof__()
    40

What is a Generator?

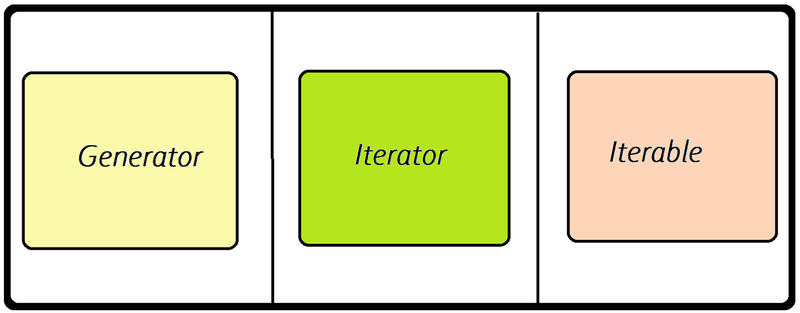

Now that we know how useful the lazy evaluation of iterators is, it's time to appreciate the encapsulation Python provides in the form of generators.

A generator is an iterator as well: an encapsulated version of an iterator that does not have an underlying iterable but generates its elements on demand.

Why have Generators when we have Iterators?

Generators are the Pythonic way of implementing an iterator. To save Python users the trouble of defining a class with a constructor and the __iter__() and __next__() methods, Python lets us declare a generator much like a function. The visible difference between a normal function and a generator function is the use of ‘yield’ in place of ‘return’.

While a normal function does all its computation at once and returns the final value, a generator yields one value and preserves its internal state until next() is called on it again, at which point it resumes where it left off and yields the next value.

    # check_even() is the helper defined in the earlier snippet
    def even_numbers(max):
        number = 2
        while number < max:
            if check_even(number):
                # hand one value to the caller and pause here
                yield number
            number += 1

The yield here plays the role that the return statement inside __next__() plays in an iterator class. The underlying protocol is still the same; the encapsulation has simply given us a much simpler interface in the form of yield.
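The fact that the protocol is unchanged can be checked with a tiny example. The generator function countdown below is a hypothetical one I made up for this purpose; the point is only that the object it returns answers to iter() and next() exactly like a hand-written iterator, and signals the end with StopIteration.

```python
def countdown(start):
    """Hypothetical generator function counting down from start to 1."""
    while start > 0:
        yield start      # pause here and hand the value to the caller
        start -= 1       # execution resumes here on the following next() call


gen = countdown(3)

same_object = iter(gen) is gen   # a generator is its own iterator
first = next(gen)                # runs the body up to the first yield
second = next(gen)               # resumes with the preserved state
rest = list(gen)                 # consumes whatever is left

try:
    next(gen)
    finished = False
except StopIteration:
    finished = True              # exhausted, just like a class-based iterator

print(same_object, first, second, rest, finished)
```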

Lastly, as always, Python provides us a beautiful and compact syntax for creating a generator, the generator expression, which looks much like a list comprehension but is written with parentheses instead of square brackets.

    evens = (i for i in range(2, 100000000000) if check_even(i))

Conclusion

- Iterators in Python are abstraction in action. The data type is decoupled from the iteration algorithm: no matter what the type of the underlying collection is, an iterator behaves the same. The for loop is a still higher level of abstraction, which makes use of iterators underneath when looping over an iterable.

- A generator is an encapsulated iterator. It is the Pythonic implementation of the iterator protocol, where the complexity of writing __next__() is hidden behind the yield keyword.

- Generators and iterators can both provide a considerable performance boost on large datasets, because they do not generate and store all the data at once; they produce it sequentially and on demand.